Download a small sample 19 of. The Parquet-format project contains all Thrift definitions that are.

Download the complete SynthCity dataset as a single parquet file.

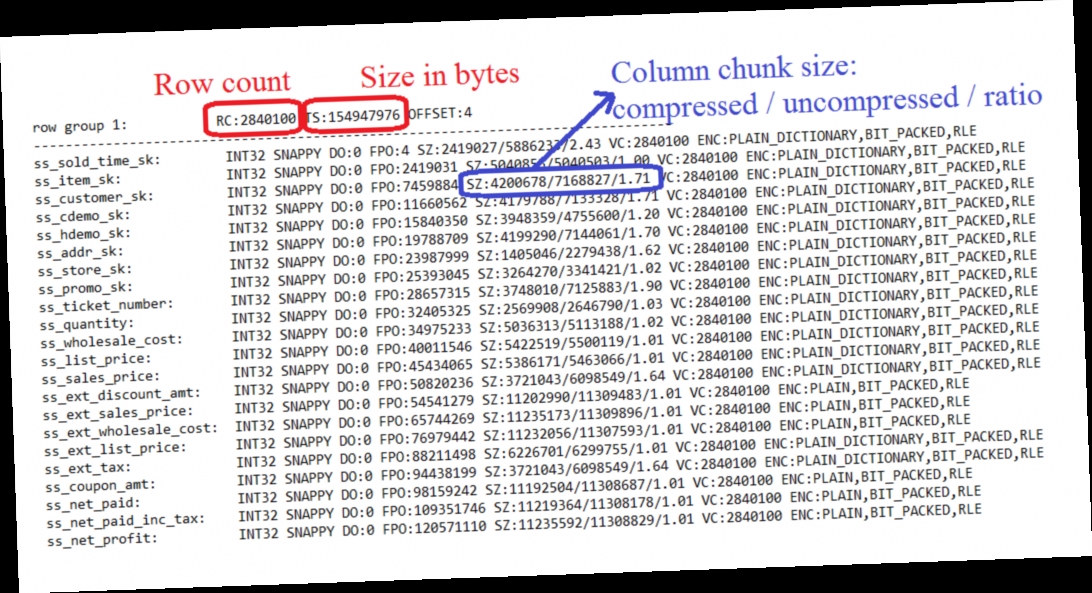

Parquet is a columnar storage format that supports nested data. Readers are expected to first read the file metadata to find all the column chunks they are interested in. Apache Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval.
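As a rough illustration of "read the file metadata first", the sketch below locates the metadata footer using only the Python standard library. It relies on the documented layout of an unencrypted Parquet file, which ends with a 4-byte little-endian footer length followed by the magic bytes `PAR1`. The function name `locate_footer` and the synthetic test bytes are assumptions made for this example; decoding the Thrift-encoded metadata itself is left to a real Parquet library.

```python
import struct
from typing import Tuple

MAGIC = b"PAR1"  # magic bytes at the start and end of an unencrypted Parquet file


def locate_footer(data: bytes) -> Tuple[int, int]:
    """Return (offset, length) of the Thrift-encoded file metadata.

    Hypothetical helper for illustration only; it does not decode the metadata
    and does not handle files with an encrypted footer.
    """
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not an unencrypted Parquet file")
    # The 4 bytes just before the trailing magic hold the footer length.
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    footer_start = len(data) - 8 - footer_len
    if footer_start < 4:
        raise ValueError("footer length is larger than the file")
    return footer_start, footer_len


# Synthetic bytes that only mimic the layout; this is not a valid Parquet file.
fake_file = MAGIC + b"x" * 10 + b"META!" + struct.pack("<I", 5) + MAGIC
offset, length = locate_footer(fake_file)
footer_bytes = fake_file[offset:offset + length]
```

A reader would seek to the end of the file, apply this logic to the last 8 bytes, and then fetch only the footer before deciding which column chunks to read.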

This dataset is stored in Parquet format. Kylo is a data lake management software platform and framework for enabling scalable, enterprise-class data lakes on big data technologies such as Teradata and Apache Spark.

There are about 1.5B rows (50 GB) in total as of 2018. The following are 19 code examples of `pyarrow.parquet.ParquetFile`. This is what will be used in the examples.

Parquet metadata is encoded using Apache Thrift. If clicking the link does not download the file, right-click the link and save the link/file to your local file system. All DPLA data in the DPLA repository is available for download as zipped JSON and Parquet files on Amazon Simple Storage Service (S3) in the bucket named s3dpla.

Apache Parquet sample file download. Column names and data types are automatically read from Parquet files.

Spark - Parquet files. Sample Parquet data file (cities.parquet).

This will invalidate `spark.sql.parquet.mergeSchema`. When it comes to storing intermediate data. The files must be CSV files with a comma separator.

This is not split into separate areas (275 GB). The larger the block. Get the Date data file.

It provides efficient data compression and. The block size is the block size of MFS, HDFS, or the file system. Configuring the size of Parquet files by setting `store.parquet.block-size` can improve write performance.

Then copy the file to your temporary. To get and locally cache the data files, the following simple code can be run.

The first 2 l. Sample snappy Parquet file.

This file is less than 10 MB. This will load all data in the files located in the folder `/tmp/my_data` into the Indexima table `default.my_table`. The column chunks should then be read sequentially.

Basic file formats, such as CSV, JSON, or other text formats, can be useful when exchanging data between applications. This dataset contains historical records accumulated from 2009 to 2018. File containing data in Parquet format.

Big parquet file sample.

The Parquet Format And Performance Optimization Opportunities Boudewijn Braams Databricks Youtube

Parquet Data File To Download Sample Twitter

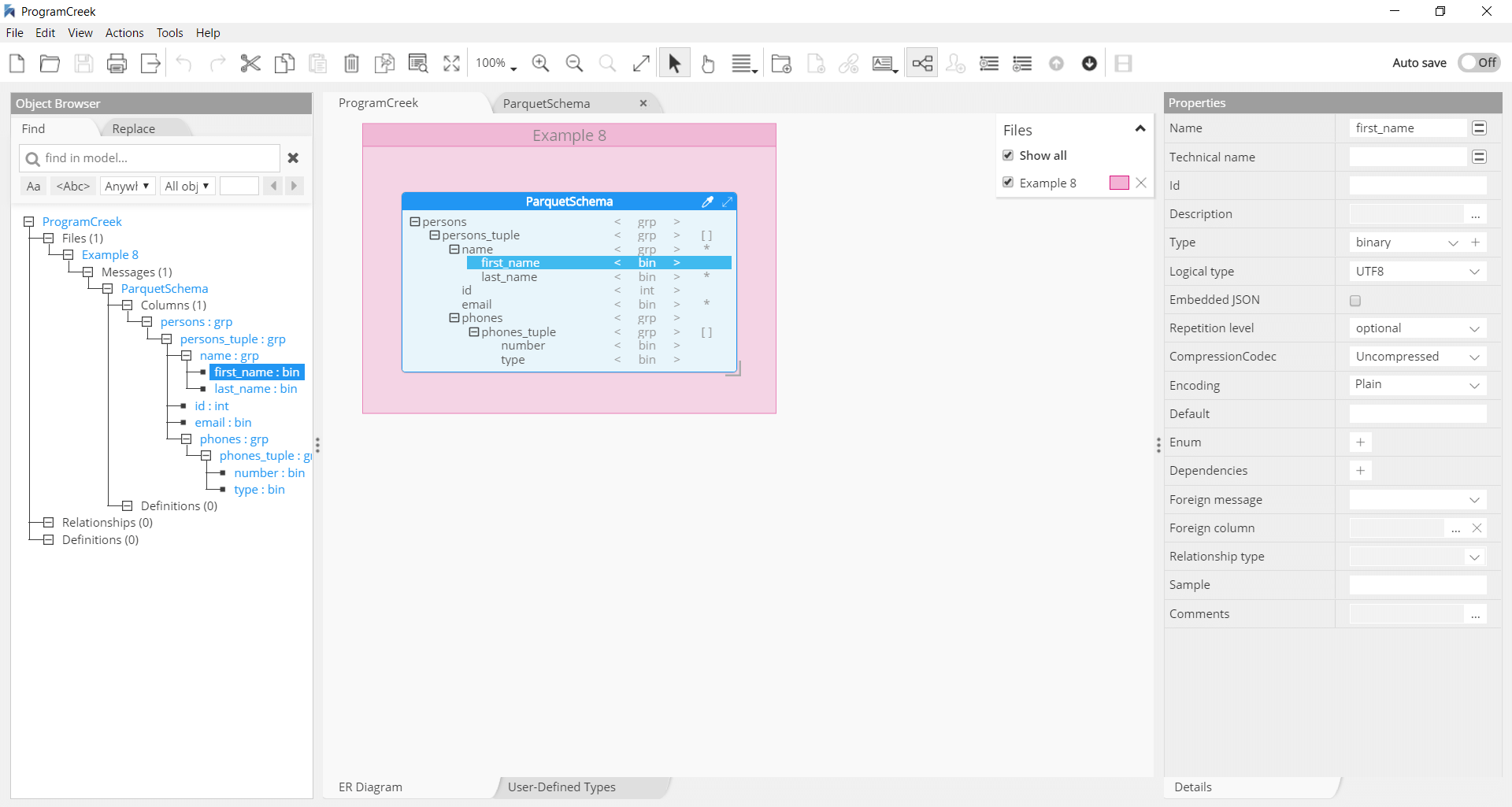

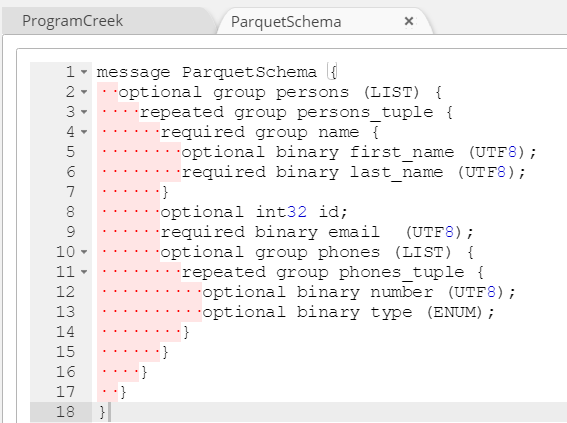

How To Generate Nested Parquet File Format Support

Diving Into Spark And Parquet Workloads By Example Databases At Cern Blog

How To Read And Write Parquet Files In Pyspark

Convert Csv To Parquet File Using Python Stack Overflow

Chris Webb S Bi Blog Parquet File Performance In Power Bi Power Query Chris Webb S Bi Blog

Writing Parquet Records From Java

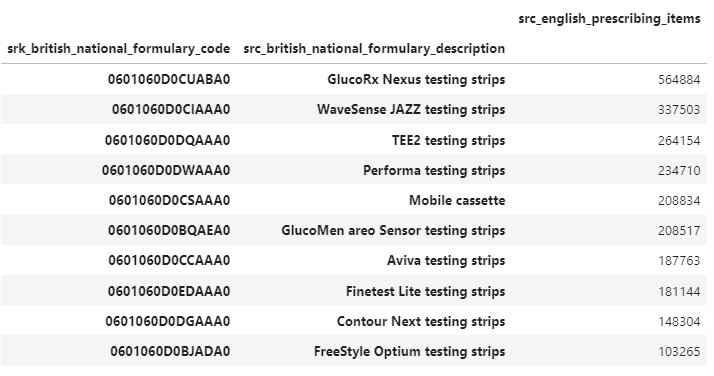

Querying Large Parquet Files With Pandas Blog Open Data Blend

Read And Write Parquet File From Amazon S3 Spark By Examples

How To Move Compressed Parquet File Using Adf Or Databricks Microsoft Q A

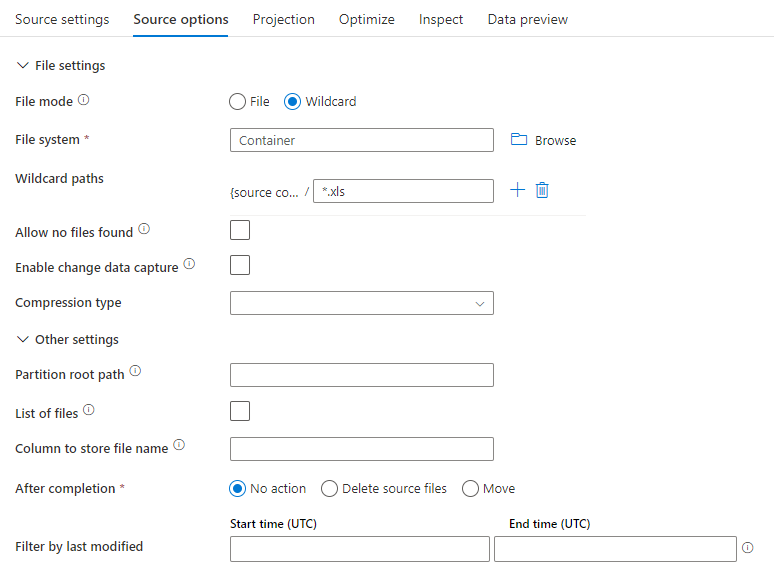

Parquet Format Azure Data Factory Azure Synapse Microsoft Docs

4 Setting The Foundation For Your Data Lake Operationalizing The Data Lake Book

Spark Read And Write Apache Parquet Spark By Examples

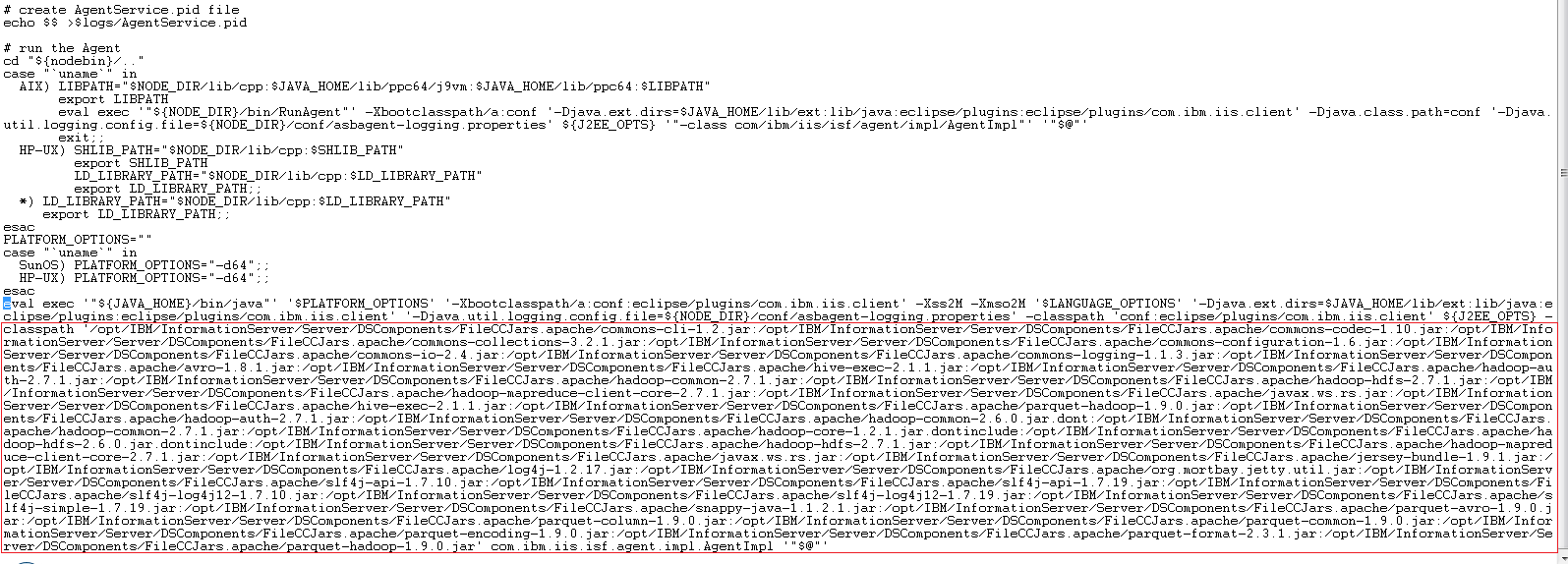

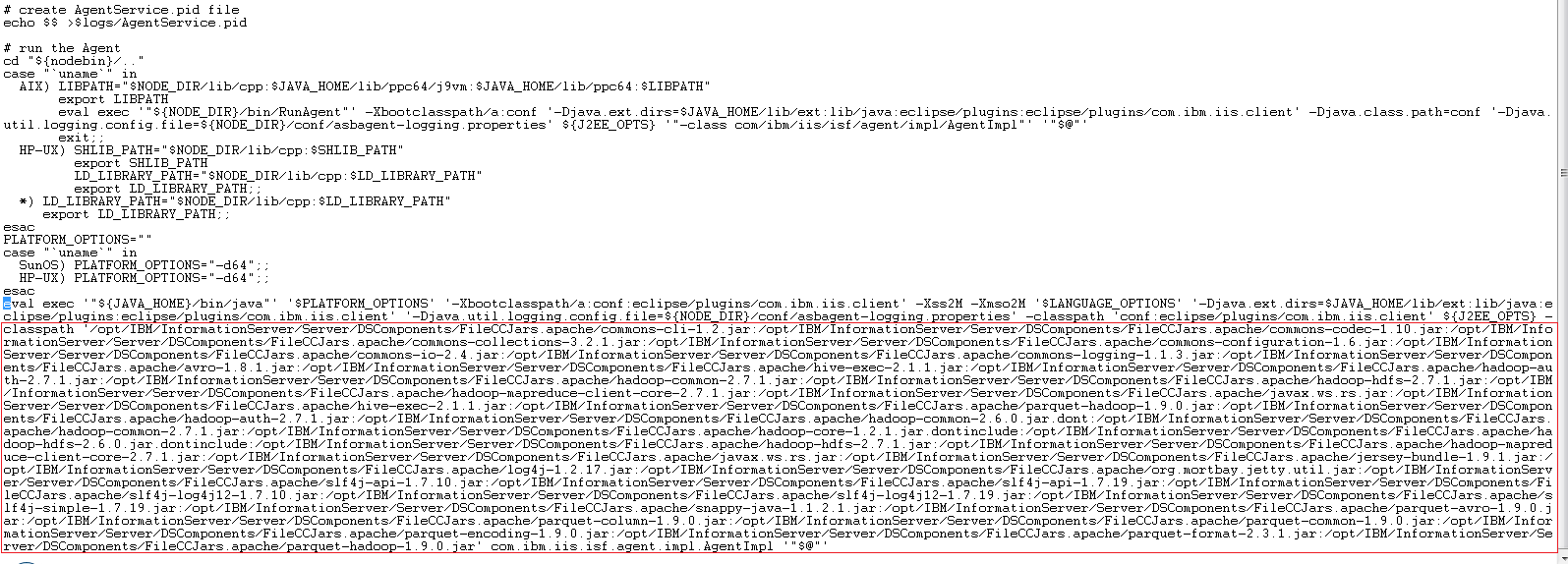

Steps Required To Configure The File Connector To Use Parquet Or Orc As The File Format
